Agentic AI Platform Privacy-Preserving AI

The Agentic AI Platform delivers a sovereign, privacy-preserving AI architecture built on confidential computing principles. It uses CPU and GPU Trusted Execution Environments (TEEs), including Intel TDX, AMD SEV-SNP, and NVIDIA Confidential Computing, to keep all data and AI workloads encrypted and isolated throughout processing. Each component, from confidential virtual machines to GPU-accelerated inference, operates within attested, hardware-protected environments that remove the need to trust the infrastructure provider. Through federated identity, client-held encryption keys, and end-to-end encrypted data flows, the Agentic AI Platform establishes verifiable technical assurance across the entire AI lifecycle. This architecture enables organizations to deploy and scale advanced AI services with full data sovereignty, confidentiality, and compliance by design.

1. Introduction

The Agentic AI Platform is a next-generation, privacy-preserving AI platform purpose-built for sovereign deployment. It unites confidential computing, federated authentication, and encrypted data orchestration into a single end-to-end trust framework. At its core, the system enforces technical assurance: verifiable, hardware-anchored protection of data in use, in motion, and at rest. This removes the need to trust the infrastructure operator.

Unlike conventional cloud models that rely on procedural controls or provider-managed encryption, the Agentic AI Platform embeds security directly in silicon. CPU and GPU TEEs, including Intel TDX, AMD SEV-SNP, IBM Secure Execution, and NVIDIA Confidential Computing, establish hardware-backed isolation boundaries in which code and data remain cryptographically protected, even from the hypervisor or cloud administrator. These foundations extend upward through confidential containers, federated single sign-on, and key-management frameworks designed so that clients retain complete custody of their cryptographic material.

The result is a verifiably sovereign AI environment that enables confidential data processing, model execution, and information retrieval across hybrid or multi-tenant infrastructures—while ensuring that no plaintext data, credentials, or model artifacts are ever exposed outside attested, encrypted boundaries.

2. Design Philosophy: Technical Assurance

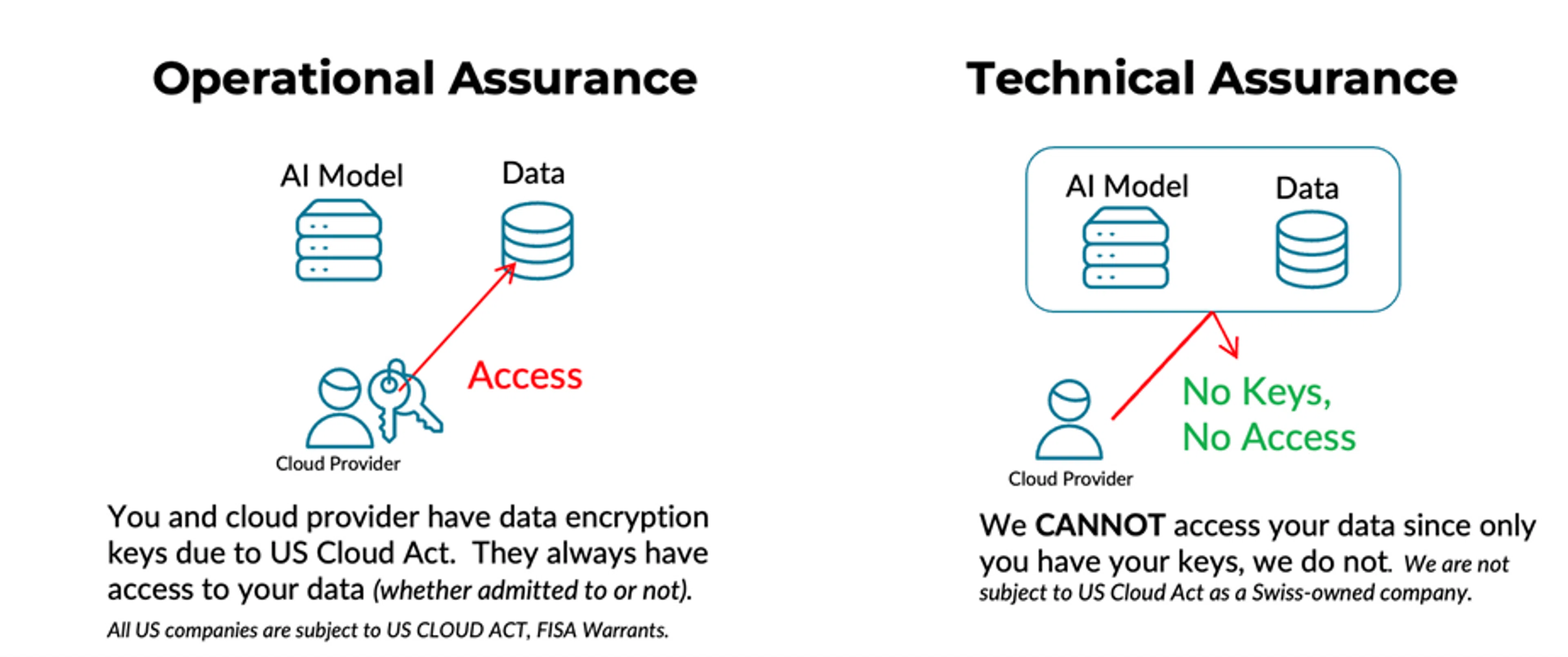

Many cloud providers rely primarily on operational assurance—that is, policies, processes, and administrative controls—to protect customer data. While these measures are necessary, they inherently depend on trust in the provider’s integrity and compliance posture. Under this model, the provider ultimately controls the infrastructure and often retains access to encryption keys, leaving a residual risk that data could be accessed—whether through internal privilege, misconfiguration, or legal compulsion under frameworks such as the U.S. CLOUD Act, illustrated in Figure 1.

The Agentic AI Platform architecture takes a fundamentally different approach, built on technical assurance rather than trust-based operational controls. As a Swiss-owned and operated platform, it is architected around client-controlled encryption, confidential computing, and hardware-enforced isolation. These cryptographic and silicon-level protections make it technically infeasible for even the infrastructure provider to access plaintext data. Clients retain exclusive control over their encryption keys, while all computation and data movement occur within attested, encrypted environments.

Figure 1: Operational vs. Technical Assurance

This philosophy is rooted in protecting data across its entire lifecycle—in transit, at rest, and in use—ensuring that sensitive workloads, AI models, and client data remain sovereign and confidential by design. The architecture that follows illustrates how this principle is implemented across the stack: from encrypted storage and retrieval-augmented generation (RAG) to GPU-based AI processing and client-facing orchestration, all secured through confidential computing and verified trust boundaries.

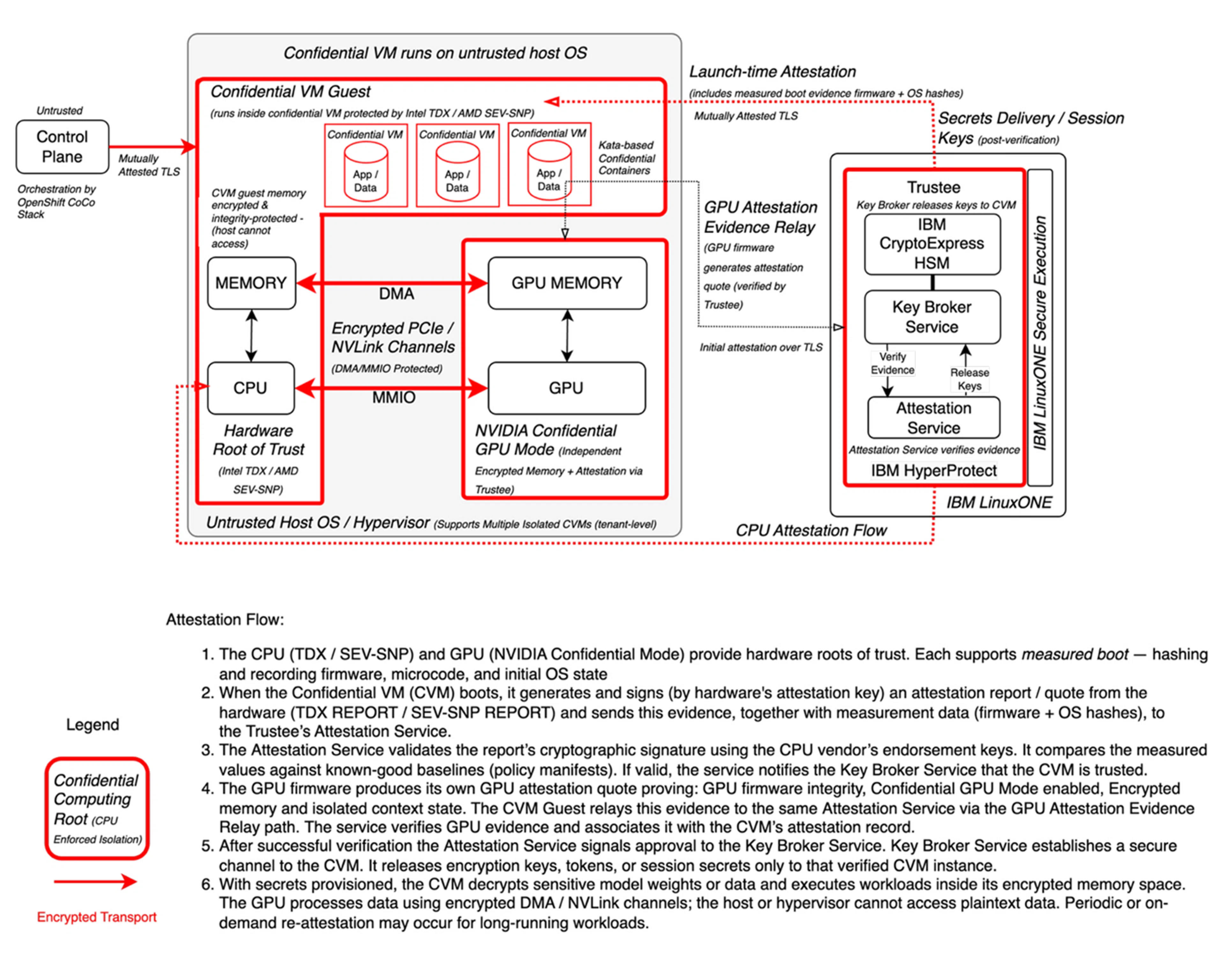

3. Phoenix Technologies Architecture for Technical Assurance

Figure 2 shows the architecture that Phoenix Technologies is building in cooperation with IBM Research and Red Hat. Its foundation is confidential computing, enabled by CPU and GPU trusted execution environments (TEEs). Within the CPU domain, workloads execute inside confidential virtual machines (CVMs), such as those provided by Intel TDX or AMD SEV-SNP, where both code and data are cryptographically isolated from the host operating system and hypervisor. The memory controller (MemCtrl) encrypts and decrypts all data as it moves between the CPU and DRAM, so an attacker who gains physical access to memory sees only ciphertext. This layer keeps computations on sensitive data confidential and tamper-resistant.

Figure 2: Phoenix Technologies AG Target Confidential Computing Architecture

3.1. Trusted GPU Attestation with Confidential Computing

NVIDIA Confidential Computing mode complements this on GPUs such as the H100 by establishing a GPU TEE with hardware-protected GPU memory and attested firmware. The GPU produces its own attestation evidence, which the CVM relays for verification to the Trustee, the attestation and key-broker service described below. Attestation confirms that both the GPU and the CPU are operating in trusted, uncompromised environments before secure computation begins.
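The composite check described above can be sketched as follows. This is a minimal illustration, not the Trustee's implementation. Real CPU and GPU evidence is signed with hardware-rooted keys and validated against vendor certificate chains. Here an HMAC stands in for those signatures. The names `Evidence`, `REFERENCE_VALUES`, `sign`, and `verify_composite` are hypothetical.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical reference values (expected measurements) held by the verifier.
REFERENCE_VALUES: Dict[str, str] = {
    "cpu": hashlib.sha384(b"approved-cvm-image").hexdigest(),
    "gpu": hashlib.sha384(b"approved-gpu-firmware").hexdigest(),
}


@dataclass(frozen=True)
class Evidence:
    kind: str          # "cpu" or "gpu"
    measurement: str   # digest reported by the TEE
    nonce: bytes       # verifier-supplied freshness value echoed back
    signature: bytes   # stand-in for a hardware-rooted signature


def sign(key: bytes, kind: str, measurement: str, nonce: bytes) -> bytes:
    # Stand-in only: real evidence uses asymmetric keys and certificate chains.
    message = kind.encode() + b"|" + measurement.encode() + b"|" + nonce
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_composite(evidence: List[Evidence], keys: Dict[str, bytes], nonce: bytes) -> bool:
    """Accept only if CPU and GPU evidence are both authentic, fresh, and match reference values."""
    verified_kinds = set()
    for ev in evidence:
        key = keys.get(ev.kind)
        if key is None:
            return False  # unknown evidence type
        if not hmac.compare_digest(sign(key, ev.kind, ev.measurement, ev.nonce), ev.signature):
            return False  # evidence not produced by the expected device
        if not hmac.compare_digest(ev.nonce, nonce):
            return False  # stale or replayed evidence
        if ev.measurement != REFERENCE_VALUES.get(ev.kind):
            return False  # unexpected firmware or image
        verified_kinds.add(ev.kind)
    return verified_kinds == {"cpu", "gpu"}


if __name__ == "__main__":
    keys = {"cpu": secrets.token_bytes(32), "gpu": secrets.token_bytes(32)}
    nonce = secrets.token_bytes(32)
    cpu_ev = Evidence("cpu", REFERENCE_VALUES["cpu"], nonce,
                      sign(keys["cpu"], "cpu", REFERENCE_VALUES["cpu"], nonce))
    gpu_ev = Evidence("gpu", REFERENCE_VALUES["gpu"], nonce,
                      sign(keys["gpu"], "gpu", REFERENCE_VALUES["gpu"], nonce))
    print(verify_composite([cpu_ev, gpu_ev], keys, nonce))  # True
    print(verify_composite([cpu_ev], keys, nonce))          # False: no GPU evidence
```

The function requires both evidence types. This reflects the point in the text that computation should begin only when the CPU and the GPU have each been verified.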

When enabled, this mode isolates data in GPU memory and encrypts communication between the CPU and GPU as part of a fully encrypted and attested compute path. GPUs participate directly in the trusted execution environment, running model inference, embedding generation, and other AI workloads entirely within protected GPU memory. Hardware-level memory protection shields model weights, intermediate results, and user data from external access. The CVM sends its initial attestation evidence to the Trustee over TLS. After verification, the Trustee's key broker issues short-lived credentials, and subsequent exchanges for key delivery and control-plane access use mutual TLS (mTLS). Together, these elements form an end-to-end confidential AI processing pipeline.
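The short-lived credentials mentioned above can be illustrated with a minimal sketch. It assumes a key broker that issues a signed, expiring token only after attestation succeeds. The token format, `BROKER_KEY`, and the function names are hypothetical. HMAC signing stands in for whatever credential format the Trustee actually uses, and the mTLS transport is not shown.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical signing key held by the key broker; a real broker would use an HSM-backed key.
BROKER_KEY = b"hypothetical-broker-signing-key"


def _tag(body: bytes) -> bytes:
    return base64.urlsafe_b64encode(hmac.new(BROKER_KEY, body, hashlib.sha256).digest())


def issue_credential(subject: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a credential that expires after ttl_seconds (called only after attestation succeeds)."""
    issued_at = int(time.time() if now is None else now)
    claims = {"sub": subject, "iat": issued_at, "exp": issued_at + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return (body + b"." + _tag(body)).decode()


def check_credential(token: str, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the credential is authentic and unexpired, else None."""
    body, _, tag = token.encode().partition(b".")
    if not hmac.compare_digest(_tag(body), tag):
        return None  # forged or modified credential
    claims = json.loads(base64.urlsafe_b64decode(body))
    current = time.time() if now is None else now
    return claims if current < claims["exp"] else None


if __name__ == "__main__":
    token = issue_credential("cvm-42", ttl_seconds=60, now=1_000)
    print(check_credential(token, now=1_030)["sub"])  # cvm-42
    print(check_credential(token, now=1_061))         # None (expired)
```

A short lifetime limits how long a leaked credential stays useful. A workload must re-attest to obtain a fresh one.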

At the core of the trust architecture lies the Trustee, responsible for attestation verification, key brokering, and cryptographic operations. Unlike hyperscaler implementations, where attestation and key management services typically operate in standard, provider-controlled VMs, the Phoenix architecture deploys the Trustee inside IBM LinuxONE Secure Execution (SE) environments, creating a verifiable root of trust that extends across both hardware and software boundaries.

IBM LinuxONE Secure Execution provides a mainframe-class confidential computing environment that isolates entire virtual machines from the host operating system, hypervisor, and management stack. The underlying IBM Z hardware establishes a measured and verified boot chain, ensuring that the hypervisor, firmware, and all system components are cryptographically validated before any workload is launched. This prevents any form of host-level introspection or tampering with the Trustee environment.

IBM Hyper Protect Virtual Servers (HPVS) host the Trustee’s key management and attestation services. HPVS enforces strict isolation boundaries where both the guest OS and application memory remain protected at all times. The Trustee’s operating state and memory are thus cryptographically sealed and verifiable, guaranteeing the confidentiality and integrity of all attestation and key management operations.

The Trustee’s cryptographic operations are anchored in IBM Crypto Express HSMs. These hardware modules are certified to FIPS 140-2 Level 4 and are designed for tamper-responding key storage and in-hardware cryptographic processing. Keys are generated, stored, and used entirely within the HSM boundary, so they are never exposed in software or memory. The HSMs support secure key export policies under a Keep Your Own Key (KYOK) model, in line with the platform’s principle of client-controlled encryption.
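The principle that keys are used inside the HSM boundary but never leave it can be pictured as an interface that hands out opaque handles instead of key bytes. The toy model below is an assumption for illustration only and does not reflect the Crypto Express programming interface. `HypotheticalHSM` and its methods are invented names, and Python cannot actually hide the stored keys.

```python
import hashlib
import hmac
import secrets
from typing import Dict


class HypotheticalHSM:
    """Toy model of an HSM boundary: callers hold opaque handles, never key bytes.

    Python cannot truly hide the dictionary below; a real HSM enforces this
    boundary in tamper-responding hardware.
    """

    def __init__(self) -> None:
        self._keys: Dict[str, bytes] = {}

    def generate_key(self) -> str:
        handle = secrets.token_hex(8)                 # opaque reference returned to the caller
        self._keys[handle] = secrets.token_bytes(32)  # key material stays "inside"
        return handle

    def mac(self, handle: str, data: bytes) -> bytes:
        # The key is used inside the boundary; only the result leaves it.
        return hmac.new(self._keys[handle], data, hashlib.sha256).digest()

    def verify(self, handle: str, data: bytes, tag: bytes) -> bool:
        return hmac.compare_digest(self.mac(handle, data), tag)

    def export_key(self, handle: str) -> bytes:
        # Plaintext export is refused; policy-controlled wrapped export is not modeled here.
        raise PermissionError("plaintext export of key material is not permitted")
```

Callers such as the Trustee's services would store only handles. A compromise of their memory would therefore reveal no key material.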

IBM LinuxONE Secure Boot and its continuous chain of trust extend from firmware through the hypervisor into guest images, producing verifiable measurement evidence. At launch, this evidence is included in the Trustee’s own attestation. This allows independent validation that the Trustee itself runs on untampered, cryptographically verified infrastructure. Phoenix’s attestation and key management services are therefore themselves confidential-computing workloads. This is a fundamental architectural distinction from hyperscalers, whose attestation and key broker services typically operate in untrusted, provider-managed domains.
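A chain of measurements can be illustrated with the "extend" operation used in TPM-style measured boot. This sketch assumes that construction for illustration; it is not the IBM Z or Secure Execution measurement format. The component names are hypothetical.

```python
import hashlib
import hmac
from typing import List


def extend(register: bytes, component: bytes) -> bytes:
    """PCR-style extend: new = SHA-256(old || SHA-256(component))."""
    return hashlib.sha256(register + hashlib.sha256(component).digest()).digest()


def measure_chain(components: List[bytes]) -> bytes:
    register = bytes(32)  # measurement register starts at all zeros
    for component in components:  # e.g. firmware -> hypervisor -> guest image
        register = extend(register, component)
    return register


# Hypothetical boot components; a verifier holds the expected ("golden") final value.
BOOT_CHAIN = [b"firmware-v1", b"hypervisor-v1", b"trustee-guest-image-v1"]
GOLDEN = measure_chain(BOOT_CHAIN)


def chain_is_trusted(reported: bytes) -> bool:
    return hmac.compare_digest(reported, GOLDEN)
```

Each value depends on every earlier component and on their order. Replacing or reordering any stage therefore changes the final measurement a verifier sees.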

3.2. New Chip-Level Encrypted Link Technologies

A key advancement in this design is the secure I/O path connecting the CPU and GPU. Historically, even with confidential computing technologies such as SEV or TDX, the PCIe and CXL interconnects were points where data could be intercepted or tampered with. The Agentic AI Platform infrastructure mitigates this through a combination of new hardware security standards. The DMTF Security Protocol and Data Model (SPDM) authenticates devices, reports their measurements, and establishes the session keys that PCIe/CXL Integrity and Data Encryption (IDE) uses to encrypt and authenticate link traffic. Trusted DMA I/O (TDIO) enforces encryption and key isolation for all DMA transactions, preventing peripheral devices from accessing host memory without authorization. The PCI-SIG TEE Device Interface Security Protocol (TDISP) builds on these mechanisms to provide hardware-backed device attestation for PCIe/CXL devices. This ensures that only verified GPUs, NICs, or accelerators can exchange encrypted data with the host. The GPU’s attestation quote is generated by the GPU firmware and relayed by the CVM to the attestation service. Together, these technologies close the long-standing gap between CPU and GPU trust boundaries, delivering a fully secure, attested data path across the compute stack.
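The device-assignment gate that TDISP adds can be pictured as a small state machine. The sketch below is a simplified model. It uses the TDISP interface states (CONFIG_UNLOCKED, CONFIG_LOCKED, RUN, ERROR) but collapses the SPDM messages, the security manager, and IDE key programming into plain method calls. The class and method names are hypothetical.

```python
from enum import Enum, auto


class TdiState(Enum):
    CONFIG_UNLOCKED = auto()
    CONFIG_LOCKED = auto()
    RUN = auto()
    ERROR = auto()


class DeviceInterface:
    """Simplified TDISP-style lifecycle of a device interface assigned to a confidential VM."""

    def __init__(self) -> None:
        self.state = TdiState.CONFIG_UNLOCKED
        self.accepted = False  # set once the CVM has verified the device's report

    def lock(self) -> None:
        self._require(TdiState.CONFIG_UNLOCKED)
        self.state = TdiState.CONFIG_LOCKED  # configuration frozen so it can be inspected

    def accept(self, report_verified: bool) -> None:
        self._require(TdiState.CONFIG_LOCKED)
        self.accepted = report_verified  # CVM checks device attestation and report

    def start(self) -> None:
        self._require(TdiState.CONFIG_LOCKED)
        if not self.accepted:
            raise RuntimeError("device report not accepted by the CVM")
        self.state = TdiState.RUN

    def config_changed(self) -> None:
        # A configuration change while locked or running invalidates trust.
        if self.state in (TdiState.CONFIG_LOCKED, TdiState.RUN):
            self.state = TdiState.ERROR
            self.accepted = False

    def stop(self) -> None:
        self.state = TdiState.CONFIG_UNLOCKED
        self.accepted = False

    def may_access_private_memory(self) -> bool:
        return self.state is TdiState.RUN and self.accepted

    def _require(self, expected: TdiState) -> None:
        if self.state is not expected:
            raise RuntimeError(f"operation requires {expected.name}, interface is {self.state.name}")
```

In this model, DMA into the CVM's private memory is permitted only after the device has been locked, verified, and started. Any later reconfiguration revokes that permission.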

3.3. Confidential Containers and Key Management for Applications

Above the hardware root of trust—anchored in CPU-based technologies such as Intel TDX or AMD SEV-SNP—operates the Confidential VM Guest (CVM), which provides a secure execution substrate for confidential workloads. This layer ensures that data in use remains encrypted and isolated from the host operating system, hypervisor, and cloud management stack, preserving the confidentiality and integrity of all computations.

The Confidential VM Host Environment runs Kata-based confidential containers, available through Red Hat OpenShift sandboxed containers. These combine the lightweight agility of containers with the strong isolation guarantees of hardware-backed virtual machines. Each pod executes within its own CVM, inheriting hardware-level protections while remaining compatible with existing container orchestration frameworks such as Kubernetes. Attestation verifies the integrity and trustworthiness of the environment before any sensitive workloads or cryptographic keys are provisioned.
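Provisioning keys only after attestation amounts to a policy check on the attestation result before a secret is released. The sketch below assumes evidence verification has already produced a result with the TEE type and measured image digest. The policy layout, resource name, digest, and secret value are hypothetical placeholders, not the Trustee's policy language.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class AttestationResult:
    verified: bool      # outcome of evidence verification
    tee: str            # e.g. "tdx" or "snp"
    image_digest: str   # measured digest of the workload image


@dataclass(frozen=True)
class ResourcePolicy:
    allowed_tees: Set[str]
    allowed_images: Set[str]


# Hypothetical policy and secret store; identifiers and digests are placeholders.
POLICIES: Dict[str, ResourcePolicy] = {
    "rag/opensearch-key": ResourcePolicy({"tdx", "snp"}, {"sha256:1111"}),
}
SECRETS: Dict[str, bytes] = {"rag/opensearch-key": b"example-secret"}


def release_secret(resource: str, result: AttestationResult) -> bytes:
    """Release a secret only to a verified CVM whose TEE type and image match the policy."""
    policy = POLICIES.get(resource)
    if policy is None:
        raise PermissionError(f"no policy for {resource}")  # deny by default
    if not result.verified:
        raise PermissionError("attestation failed")
    if result.tee not in policy.allowed_tees or result.image_digest not in policy.allowed_images:
        raise PermissionError("workload does not satisfy the release policy")
    return SECRETS[resource]
```

Denying by default means a resource without an explicit policy can never be released, even to a correctly attested pod.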

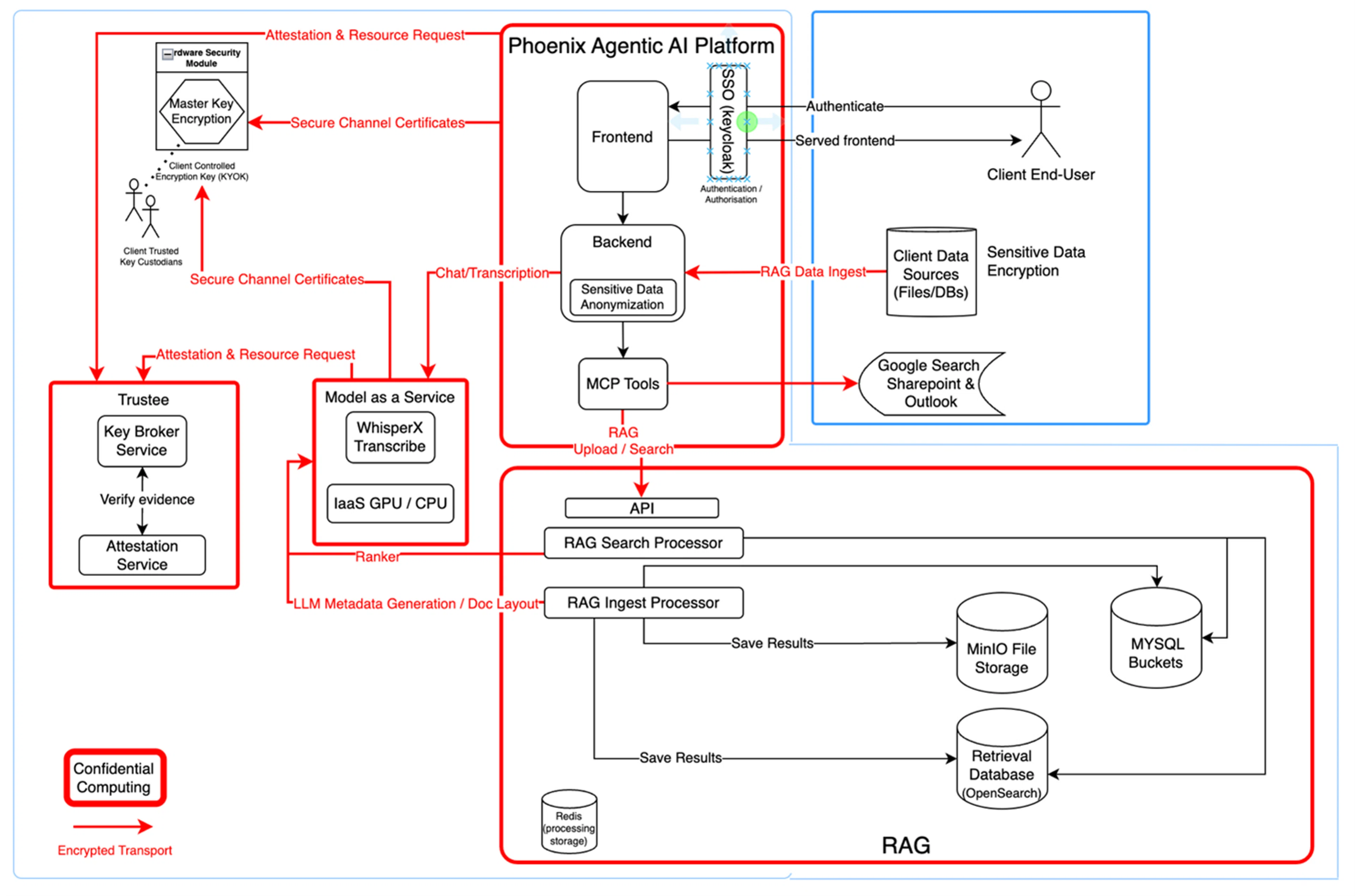

4. Agentic AI Platform Confidential Computing

The Agentic AI Platform manages authentication, authorization, and data access through a federated single sign-on (SSO) architecture built on Keycloak, as illustrated in Figure 3. In a typical deployment, Keycloak is federated with a client’s Microsoft Entra ID through an OpenID Connect (OIDC) trust. In this configuration, Entra ID serves as the identity provider and Keycloak as the relying party. Users authenticate with their enterprise credentials, and the client retains full control over access and MFA policies. Keycloak validates the Entra-issued tokens and establishes its own sessions and access tokens for downstream services. This provides secure, standards-based authentication without Keycloak ever handling user passwords.
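The following sketch illustrates the token checks a relying party performs. It assumes the token signature has already been verified against the identity provider's published signing keys. That step is not shown because the Python standard library has no RSA support. The sketch covers only the issuer, audience, and validity-window checks. In the configuration described above, Keycloak performs these checks itself. The issuer URL and client ID in the test are hypothetical placeholders.

```python
import base64
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def validate_claims(token: str, issuer: str, client_id: str,
                    now: Optional[float] = None, leeway: int = 60) -> dict:
    """Check issuer, audience, and validity window of an already signature-verified JWT."""
    _header, payload, _signature = token.split(".")
    claims = json.loads(_b64url_decode(payload))
    current = time.time() if now is None else now
    if claims.get("iss") != issuer:
        raise ValueError("unexpected issuer")
    audience = claims.get("aud")
    audiences = audience if isinstance(audience, list) else [audience]
    if client_id not in audiences:
        raise ValueError("token was not issued for this relying party")
    if current > claims["exp"] + leeway:
        raise ValueError("token expired")
    if "nbf" in claims and current + leeway < claims["nbf"]:
        raise ValueError("token not yet valid")
    return claims
```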

Figure 3: Agentic AI Platform Confidential Computing Architecture

The Agentic AI Platform exposes user-facing APIs for retrieval-augmented generation (RAG), transcription, and chat, and it enforces TLS on all connections. Client-controlled encryption keys, with master keys protected in hardware security modules (HSMs) under the Keep Your Own Key (KYOK) model, ensure that clients, not the platform provider, retain ultimate control over their data and cryptographic material. Middleware components implemented as Model Context Protocol (MCP) tools handle secure data exchange with external client systems such as SharePoint and Outlook, while keeping sensitive information encrypted throughout its lifecycle.
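The TLS requirement, and the mutual TLS used between CVMs and the Trustee, can be expressed as a server-side context configuration. This is a minimal sketch using Python's standard `ssl` module. The certificate and CA file paths are hypothetical arguments, and the TLS 1.2 floor is an assumed baseline rather than a documented platform setting.

```python
import ssl
from typing import Optional


def make_mtls_server_context(certfile: Optional[str] = None,
                             keyfile: Optional[str] = None,
                             client_ca: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that rejects legacy protocol versions and requires client certificates."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2    # raise to TLSv1_3 where all peers support it
    ctx.verify_mode = ssl.CERT_REQUIRED             # mutual TLS: clients must present a valid certificate
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)      # server identity
    if client_ca:
        ctx.load_verify_locations(cafile=client_ca)  # CA that issues client certificates
    return ctx
```

For plain TLS on user-facing APIs, `verify_mode` would typically remain `ssl.CERT_NONE` on the server side. Client authentication then happens through the SSO tokens described earlier.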

The Model-as-a-Service (MaaS) subsystem provides confidential AI model execution, such as transcription with WhisperX or large language model (LLM) inference. These workloads execute within Kata-based Confidential Containers, leveraging hardware attestation to verify integrity before deployment. GPU and CPU compute resources are allocated within confidential enclaves, ensuring that model inputs, outputs, and intermediate states remain private. Communication between this service layer and the broader platform occurs over encrypted transport channels secured with TLS, extending confidentiality guarantees even during high-performance, distributed AI processing.

At the heart of the data intelligence workflow is the RAG subsystem, which ingests, processes, indexes, and retrieves client data for AI-enhanced search and reasoning. The RAG Ingest Processor securely receives data from client sources such as files, databases, or communication systems. It then processes the data and stores it in encrypted form in MinIO object storage and in MySQL and OpenSearch retrieval databases. The RAG Search Processor performs semantic retrieval and ranking, drawing on metadata and embeddings generated by confidential LLMs. All intermediate results are cached in encrypted Redis storage, so no plaintext data is exposed outside the trusted enclave environment. The API layer connects this subsystem to the rest of the Agentic AI Platform and supports RAG upload and query operations under strict confidentiality.
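The ranking step performed by the RAG Search Processor can be illustrated with a brute-force cosine-similarity search. This is an in-memory stand-in that assumes embeddings have already been computed. A deployment would delegate this work to OpenSearch's vector (k-NN) search rather than scanning in Python. The document IDs and toy three-dimensional vectors in the test are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: Sequence[float], index: Dict[str, Sequence[float]], k: int = 3) -> List[str]:
    """Rank indexed chunks by cosine similarity to the query embedding and return the best k IDs."""
    scored = sorted(index.items(), key=lambda item: cosine_similarity(query, item[1]), reverse=True)
    return [doc_id for doc_id, _ in scored[:k]]
```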

On the client side, end-users and data sources operate within an encrypted boundary. Client data remains encrypted both in storage and during ingestion into the Sovereign Infrastructure, protected by client-held keys. Data from SharePoint, Outlook, or other repositories flows through the MCP connectors, where it is securely processed and indexed for retrieval while maintaining encryption throughout the transfer and ingestion process.

Within the Agentic AI Platform environment, all user and client data undergo strict sensitive-data treatment before any external interaction occurs. A confidential redaction pipeline runs entirely within the back end’s TEE. It detects and removes personally identifiable information (PII), internal identifiers, cryptographic material, and proprietary terms, using open-source frameworks extended with custom recognizers for domain-specific patterns. Once the data is sanitized, any required external lookup is performed through an MCP Google Search connector operating within the same confidential infrastructure. This connector communicates directly with Google’s Programmable Search API over TLS, using platform-controlled credentials under client key custody. No plaintext user data, identifiers, or contextual metadata leave the enclave. All outbound queries are abstracted, anonymized, and policy-verified before egress, and search results are re-encrypted on return for in-enclave processing. This architecture enables secure access to public web knowledge while preserving complete data confidentiality and sovereignty.
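The detect, replace, and verify flow can be sketched with regular expressions. This is a minimal sketch. The platform's pipeline uses open-source detection frameworks with custom recognizers. This example instead hard-codes three hypothetical patterns: e-mail addresses, IPv4 addresses, and an invented `PRJ-` project-code format. It shows only the sequence of redacting, recording findings, and re-checking before egress.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical recognizers: two generic patterns plus one custom, domain-specific identifier.
RECOGNIZERS: Dict[str, re.Pattern] = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "PROJECT_CODE": re.compile(r"\bPRJ-\d{4}\b"),  # invented internal identifier format
}


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace each recognized entity with a typed placeholder and report what was found."""
    findings: List[str] = []
    for label, pattern in RECOGNIZERS.items():
        def _replace(match: re.Match, label: str = label) -> str:
            findings.append(label)
            return f"<{label}>"
        text = pattern.sub(_replace, text)
    return text, findings


def safe_for_egress(text: str) -> bool:
    """Policy check before an outbound query: no recognizer may still match."""
    return not any(pattern.search(text) for pattern in RECOGNIZERS.values())


if __name__ == "__main__":
    query = "Contact anna@example.ch about PRJ-1234 on 10.0.0.5"
    cleaned, found = redact(query)
    print(cleaned)  # Contact <EMAIL> about <PROJECT_CODE> on <IPV4>
    print(found, safe_for_egress(cleaned))
```

The final `safe_for_egress` check mirrors the policy verification described in the text. A query is sent out only if no recognizer fires on the sanitized text.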

5. Conclusion

The Agentic AI Platform demonstrates how confidential computing can transform AI into a truly sovereign service. From the silicon root of trust to attested application layers, every component of the stack participates in a continuous chain of verification and encryption. CPU and GPU TEEs enforce isolation at the hardware level; encrypted I/O channels eliminate traditional exposure points between devices; and confidential containers guarantee that AI workloads run only in verified, policy-compliant environments.

Through federated identity, hardware-based attestation, and client-controlled key management, the system provides cryptographically verifiable, hardware-anchored assurance that neither operators nor third parties can access client data or models. Combined with retrieval-augmented generation, confidential model execution, and in-enclave data governance, the Agentic AI Platform delivers an end-to-end architecture for secure, private, and compliant AI operations.